Building a global TiddlyWiki hosting platform with Cloudflare Durable Objects and Workers — Tiddlyflare

Table of contents

- Context

- Requirements

- High-level architecture

- CreateWiki data flow

- GetWiki data flow

- Location hints

- OK. So what.

- Mindset shift

- Conclusion

Tiddlyflare is an open source TiddlyWiki hosting platform built with Cloudflare’s SQLite Durable Objects and Workers.

It supports multiple users, each with their own collection of TiddlyWikis, and each user’s data is automatically placed in a Cloudflare region close to them. Each TiddlyWiki hosted by Tiddlyflare can be up to 1GB (limits will be raised to 10GB soon).

This article goes into the architecture behind Tiddlyflare, and showcases the power and flexibility you get from Durable Objects (DO), and the Workers platform overall, without much extra work on your part.

You can find the actual source code for Tiddlyflare implementing everything described in this article at https://github.com/lambrospetrou/tiddlyflare. It works.

Context

Let’s introduce some useful background context.

TiddlyWiki

“TiddlyWiki is a unique non-linear notebook for capturing, organising and sharing complex information.” (tiddlywiki.com)

TiddlyWiki is an amazing open-source tool created more than a decade ago by Jeremy Ruston and, as the quote above mentions, it can be used for note-taking, information and knowledge organization, and much more.

For the purposes of this article, you only need to know that by default each wiki stores all of its data in a single HTML file. 😅

When you load that HTML file into a browser you get a UI, and the data is embedded into the HTML file itself.

There are a million ways to persist your changes (see the Saving wiki section for supported plugins), but in this article we will focus on the PutSaver saving mode.

Automatically saving changes with PutSaver

We will build a platform to host TiddlyWikis. While editing our wikis we want the changes to be automatically saved remotely on the platform.

Conveniently, TiddlyWiki has a very basic but super flexible saving mechanism named PutSaver, whose server side we will implement (see the PutSaver code).

PutSaver is very simple.

Once the TiddlyWiki HTML file is loaded in a browser, it sends an OPTIONS request to the current URL location, and based on the response it decides if PutSaver is supported.

If the response contains a header named dav with any value, and a success status code (200 <= status < 300), then we are good to go.
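The detection rule boils down to a single predicate. Below is a minimal sketch of it as a hypothetical helper, `supportsPutSaver`, applied to the `Response` of the OPTIONS probe; it is not TiddlyWiki's own source code, just the rule written down in TypeScript.

```typescript
// Hypothetical helper applying the PutSaver detection rule to the response
// of the OPTIONS probe. Not TiddlyWiki's own implementation.
function supportsPutSaver(res: Response): boolean {
  // Any value is accepted for the `dav` header; only its presence matters.
  // Header names are case-insensitive, so "DAV" and "dav" both match.
  const hasDav = res.headers.has("dav");
  // Success status code: 200 <= status < 300.
  const isSuccess = res.status >= 200 && res.status < 300;
  return hasDav && isSuccess;
}

// In the browser, the probe targets the page's own URL, e.g.:
//   const res = await fetch(location.href, { method: "OPTIONS" });
//   if (supportsPutSaver(res)) { /* enable saving via PUT */ }
```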

From that point on, any change you make to your wiki is automatically propagated with a PUT request to the current URL, and the request body is the whole HTML file.

Not only the changes, but the whole file!

We don’t care if this is efficient or not, that’s how PutSaver works, and that’s what we will use.
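On the server side, supporting PutSaver means answering OPTIONS with a dav header, overwriting the stored file on every PUT, and serving the latest copy on GET. Here is a minimal sketch using the standard Fetch API `Request`/`Response` types; `handlePutSaver` and the in-memory `Map` are hypothetical stand-ins for the durable storage that, in Tiddlyflare, lives inside the wiki's Durable Object.

```typescript
// Minimal PutSaver endpoint sketch using the standard Fetch API types.
// `store` is a hypothetical in-memory stand-in for durable storage; in
// Tiddlyflare that role belongs to the wiki's Durable Object.
async function handlePutSaver(req: Request, store: Map<string, string>): Promise<Response> {
  // Each wiki lives at its own path, so the path is the storage key.
  const key = new URL(req.url).pathname;

  switch (req.method) {
    case "OPTIONS":
      // Advertise PutSaver support: 2xx status plus a `dav` header (value irrelevant).
      return new Response(null, { status: 200, headers: { dav: "1" } });
    case "PUT":
      // The body is the entire wiki HTML file, not a diff, so overwrite the stored copy.
      store.set(key, await req.text());
      return new Response(null, { status: 200 });
    case "GET": {
      // Serve the latest saved version of the wiki.
      const html = store.get(key);
      if (html === undefined) return new Response("Not found", { status: 404 });
      return new Response(html, { headers: { "content-type": "text/html; charset=utf-8" } });
    }
    default:
      return new Response("Method not allowed", { status: 405 });
  }
}
```

Because every PUT carries the full file, the server never has to merge anything: the last successful PUT simply becomes the wiki.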

Durable Objects

I already wrote an article introducing Durable Objects a few weeks ago, so I’ll keep this short.

Durable Objects (DO) are built on top of Cloudflare Workers (edge compute). Each DO instance has its own durable storage persisted across requests and its own in-memory state, and it executes in a single-threaded fashion. You also decide its location, and you can create millions of them!

I said it before, and I’m saying it again: Durable Objects is the most underrated compute and storage product by Cloudflare.

It’s so different from other platforms that it’s not easy to realize its power initially. Once you get it, though, it’s a game changer! 🤯

Requirements

Let’s get started with the requirements of our platform.

1. We want to support multiple users, each with their own collection of TiddlyWikis, and the usual CRUD functionality (create/read/update/delete).

2. Each user’s data should be isolated from other users’ data, i.e. user A only has access to their own TiddlyWikis.

3. Each TiddlyWiki created on the platform gets its own URL, and visiting that URL responds with the latest version of the wiki HTML file.

4. The PutSaver saving mechanism should be supported for all wikis by default.

5. We want all user data to be close to the user’s location. A user in Portland has their data near Portland, and a user in London has their data near London.

6. Support scale (intentionally left open-ended; the only limit is your imagination).

Requirement 5 is where things get hairy, really fast, with traditional hosting/cloud/infrastructure platforms.

High-level architecture

There are many approaches to implement this and satisfy the requirements. Some are complex, others are complicated, and others are just a pain in the ass.

The following design exploits and showcases the goodies of Durable Objects, satisfying all the requirements without making the code complex or our mental model hard to reason about.

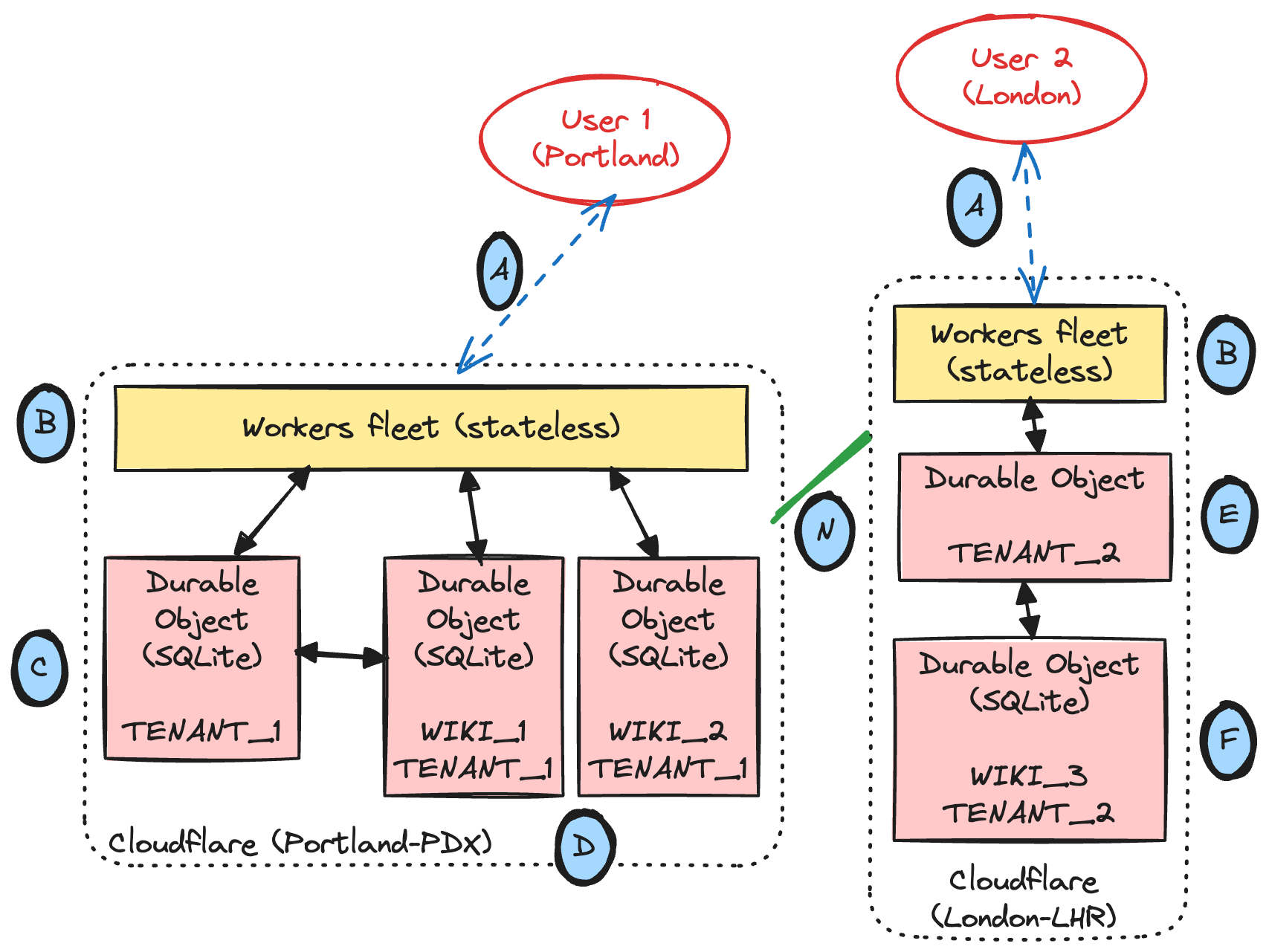

Let’s break down the above diagram.

- User 1
  - First user is in Portland, US.

- User 2
  - Second user is in London, UK.

- Traffic to Cloudflare network (A)
  - The traffic from all users is routed to the nearest Cloudflare datacenter using Anycast.

- Workers fleet (B)
  - The Workers are stateless, and each user request goes to any available machine inside a datacenter close to the user request’s location. Not necessarily the same machine each time.

- Durable Object with ID TENANT_1 (C)
  - A Durable Object (DO) instance is created the first time a user attempts to create a wiki, placed in a location near the user’s request, under the Durable Object Namespace TENANT.
  - TENANT_1 is the specific DO instance created for User 1, and is placed inside a datacenter near the Portland region, close to User 1’s location.
  - The tenant Durable Objects hold data about the user itself, and a metadata record for each wiki created for that user.
  - The tenant Durable Object does NOT manage any of the wiki data (i.e. the wiki HTML).

- Durable Objects with IDs WIKI_1 and WIKI_2 (D)
  - A Durable Object (DO) instance is created when a wiki is created, placed in a location near the TENANT_1 DO location, under the namespace WIKI.
  - WIKI_1 and WIKI_2 are the specific DO instances created for User 1’s wikis.
  - We will see later why the TENANT_1 location is used here instead of User 1’s location.

- Durable Object with ID TENANT_2 (E)
  - TENANT_2 is the specific DO instance created for User 2’s information, and is placed inside a datacenter near the London region, close to User 2’s location.

- Durable Object with ID WIKI_3 (F)
  - WIKI_3 is the DO instance created for User 2’s wiki, near the location of the TENANT_2 Durable Object instance.

- Cloudflare network (N)
  - Each Worker and Durable Object instance can communicate with other instances across Cloudflare’s network without going over the public internet (most of the time).
  - This can be used to efficiently communicate between DO instances, or with other Cloudflare services.

CreateWiki data flow

Let’s now explore a concrete example of how data flows through the above diagram when User 1 creates their first wiki.

1. User 1 opens Tiddlyflare (e.g. on tiddly.lambros.dev), logs in, and clicks the button to create a TiddlyWiki.

2. The triggered POST request flows to the nearest Workers fleet within a datacenter in Portland.

3. The Worker code attempts to create a Durable Object Stub for User 1’s tenant ID.
   - Since this is the first time we attempt that, there is no Durable Object instance with that ID, therefore the platform will create one in the closest datacenter with Durable Object support, often in the same region.
   - The Worker code doesn’t need to check whether a TENANT DO already exists and worry about all that. When you create a reference to the DO you want to use, if it doesn’t already exist it gets created, and then you just get routed to it.
   - Worker code to get access to a DO instance:

     ```typescript
     const doStub = env.TENANT.get(env.TENANT.idFromName(tenantId));
     ```

   - The above line returns a Durable Object Stub, which allows us to call methods on the DO instance directly.
   - This stub can reference a DO instance in the same datacenter, on the same machine, or on the other side of the world.

4. Now that the TENANT_1 DO stub is created, we call `doStub.createWiki(...)` to create the first TiddlyWiki.

5. The Durable Object TENANT_1 now receives the request, initializes its local SQLite storage with the appropriate SQL tables for the user information (remember, this is the first time the user did anything), and subsequently attempts to create the Durable Object that will manage the wiki’s data.

6. Similar to step 3, we now attempt to get a stub to the wiki DO WIKI_1.
   - Generate a random ID for the wiki (i.e. WIKI_1), get the DO stub, and create the wiki:

     ```typescript
     // Generate a random ID for the wiki (i.e. WIKI_1).
     const doId = env.WIKI.newUniqueId();
     // Get the DO stub; get() is synchronous, so there is nothing to await.
     const wikiStub = env.WIKI.get(doId);
     // Create the wiki.
     await wikiStub.create(tenantId, wikiId, name, wikiType);
     ```

   - The WIKI_1 DO instance is created near the TENANT_1 location because that’s the origin of the request to the WIKI_1 DO instance.

7. The WIKI_1 DO instance initializes its own local SQLite database with the right tables to store wiki data, and stores the default TiddlyWiki HTML file.

8. The TENANT_1 DO instance receives the successful response from WIKI_1, writes in its own SQLite database that WIKI_1 was created successfully, and returns the information about the newly created wiki back to User 1.
   - The URL of a wiki includes the WIKI_1 ID, therefore just having the URL is enough to reference the WIKI_1 DO instance without having to access TENANT_1 at all.
   - Alternatively, if you want to expose “names” instead of IDs through URLs, you can use the `idFromName(name)` function to create your DO ID (see docs).

Are you starting to see the magic? 👁️
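To see the whole create flow in one place without a Cloudflare account, here is a local simulation. Everything in it is a hypothetical stand-in: `FakeNamespace` only mimics the get-or-create behaviour of a namespace binding’s `idFromName`, `newUniqueId`, and `get` (it is not the Workers API), the objects keep state in fields instead of SQLite, and the `/w/<wikiId>/<name>` URL layout is an assumption. What it does capture is the shape of the flow: the Worker never checks whether a tenant exists, the tenant object creates the wiki object, and the tenant only stores metadata.

```typescript
// Local simulation of the CreateWiki flow. All names here are hypothetical
// stand-ins that mimic Durable Object namespace semantics, NOT the Workers API.
type Id = string;

class FakeNamespace<T> {
  private readonly instances = new Map<Id, T>();
  private readonly names = new Map<string, Id>();
  private counter = 0;

  constructor(private readonly prefix: string, private readonly factory: (id: Id) => T) {}

  // Like idFromName: the same name always maps to the same ID.
  idFromName(name: string): Id {
    let id = this.names.get(name);
    if (id === undefined) {
      id = this.newUniqueId();
      this.names.set(name, id);
    }
    return id;
  }

  // Like newUniqueId: a fresh ID per call (real IDs are random; a counter suffices here).
  newUniqueId(): Id {
    this.counter += 1;
    return `${this.prefix}-${this.counter}`;
  }

  // Get-or-create: an instance springs into existence on first access.
  get(id: Id): T {
    let obj = this.instances.get(id);
    if (obj === undefined) {
      obj = this.factory(id);
      this.instances.set(id, obj);
    }
    return obj;
  }
}

// Placeholder HTML; a real deployment would store the empty TiddlyWiki file.
const DEFAULT_HTML = "<!doctype html><title>New TiddlyWiki</title>";

class WikiObject {
  tenantId = "";
  name = "";
  html = "";

  // The wiki object initializes its storage with the default HTML.
  async create(tenantId: string, name: string): Promise<void> {
    this.tenantId = tenantId;
    this.name = name;
    this.html = DEFAULT_HTML;
  }

  async getFileSrc(): Promise<string> {
    return this.html;
  }
}

class TenantObject {
  // Metadata records only; the wiki HTML itself never lives here.
  readonly wikis = new Map<Id, { name: string; url: string }>();

  constructor(private readonly tenantId: Id, private readonly wikiNs: FakeNamespace<WikiObject>) {}

  async createWiki(name: string): Promise<{ wikiId: Id; url: string }> {
    // The tenant mints a unique ID and gets a stub for the new wiki object.
    const wikiId = this.wikiNs.newUniqueId();
    await this.wikiNs.get(wikiId).create(this.tenantId, name);
    // Only after the wiki object succeeded: record metadata and build the URL.
    const url = `/w/${wikiId}/${encodeURIComponent(name)}`;
    this.wikis.set(wikiId, { name, url });
    return { wikiId, url };
  }
}

const WIKI = new FakeNamespace<WikiObject>("wiki", () => new WikiObject());
const TENANT = new FakeNamespace<TenantObject>("tenant", (id) => new TenantObject(id, WIKI));

// Worker: derive the tenant ID from the user ID; no existence check needed.
async function handleCreateWiki(userId: string, name: string): Promise<{ wikiId: Id; url: string }> {
  return TENANT.get(TENANT.idFromName(userId)).createWiki(name);
}
```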

GetWiki data flow

We have a wiki created now, so let’s see the much simpler wiki data read flow.

1. User 1 opens the wiki URL returned by the creation flow.

2. The GET request flows to the nearest Workers fleet within a datacenter in Portland.

3. This time the Worker code creates a Durable Object Stub straight to WIKI_1, bypassing the TENANT_1 DO, since the URL encodes the DO instance ID.
   - Worker code to reach the wiki DO instance:

     ```typescript
     const doId = extractWikiId(requestUrl);
     const wikiStub = env.WIKI.get(env.WIKI.idFromString(doId));
     ```

   - As before, WIKI_1 is probably in the same region as TENANT_1, or even the same datacenter.

4. The Worker now calls the DO stub to return the wiki content:

   ```typescript
   return wikiStub.getFileSrc(wikiId);
   ```

5. The WIKI_1 DO instance wakes up (if not already running), reads the contents of the wiki HTML file from its local SQLite database, and streams it back to the Worker.

6. The Worker simply forwards the stream of the DO response back to User 1, without any intermediate buffering, so it adds no extra overhead.

In summary, all GET requests for a wiki flow through the Worker nearest to the user’s location, then get routed to the corresponding WIKI Durable Object instance holding that wiki’s data (which could be on the other side of the world), and the content is streamed back to the user.

That’s it.
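The read path can be sketched as a small handler. `extractWikiId` is named in the article, but this implementation is hypothetical and assumes wiki URLs shaped like `/w/<wikiId>/...`; `WikiStub` is likewise a hypothetical minimal interface in which `getFileSrc` resolves to a `Response`. The stub lookup is injected so the sketch runs locally; in a Worker it would wrap `env.WIKI.get(env.WIKI.idFromString(wikiId))`.

```typescript
// Hypothetical URL layout: https://<host>/w/<wikiId>/<optional-name>
function extractWikiId(requestUrl: string): string | null {
  const [prefix, wikiId] = new URL(requestUrl).pathname.split("/").filter(Boolean);
  return prefix === "w" && wikiId ? wikiId : null;
}

// Hypothetical minimal shape of a wiki stub: getFileSrc resolves to a
// Response whose body streams the stored HTML.
interface WikiStub {
  getFileSrc(wikiId: string): Promise<Response>;
}

async function handleGetWiki(
  req: Request,
  getWikiStub: (wikiId: string) => WikiStub,
): Promise<Response> {
  const wikiId = extractWikiId(req.url);
  if (wikiId === null) return new Response("Not found", { status: 404 });
  // The tenant object is never consulted: the URL alone identifies the wiki object.
  const stub = getWikiStub(wikiId);
  // Return the stub's Response as-is so its body streams through unbuffered.
  return stub.getFileSrc(wikiId);
}
```

Returning the stub’s `Response` directly, instead of awaiting its text and building a new one, is what keeps the Worker from holding the whole HTML file in memory.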

User 2 accessing User 1 wiki

Can you guess what the read flow looks like for User 2 trying to read WIKI_1 from User 1? For simplicity, let’s assume all wikis are publicly accessible to anyone with the URL (in reality we have actual auth).

Go ahead and guess which step of the previous section would be different.

OK.

The differences are steps 2 and 3.

The request from User 2 will go to the Workers fleet near User 2’s location, somewhere in London.

The WIKI_1 Durable Object instance doesn’t move after creation, therefore in step 3 the Worker will create a WIKI_1 DO stub that will reach out to the Portland region to access the WIKI_1 DO instance, and then continue with the wiki reading as usual.

Location hints

There was a subtle difference between step 3 and step 6 in the creation flow (User 1).

In step 3, the TENANT_1 Durable Object (DO) instance is created in the nearest datacenter to the user location. Whereas, in step 6, the WIKI_1 Durable Object instance is created in the nearest datacenter to the TENANT_1 DO instance’s location.

In general, the location considered when creating a Durable Object instance is the location of the running code that attempts to create the Durable Object Stub.

In step 3, the stub creator is the Worker code running closest to the user, whereas in step 6 it’s the code running inside the TENANT_1 DO instance.

In this specific example, both Durable Object instances are going to end up in the same region since they are all close by, maybe even the same datacenter/machine, but in other scenarios this might be different. See the example where User 2 attempts to access WIKI_1.
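The placement rule (the first stub creator’s location wins, and the instance stays put afterwards) can be modelled in a few lines. `PlacementRegistry` and the region strings are hypothetical; Cloudflare picks an actual datacenter with Durable Object support, whereas this sketch only records the caller’s region.

```typescript
// Hypothetical model of Durable Object placement: an object is placed near
// whoever first obtains a stub to it, and never moves afterwards.
class PlacementRegistry {
  private readonly placements = new Map<string, string>();

  // Returns where the object lives, placing it at `callerRegion` on first access.
  access(objectId: string, callerRegion: string): string {
    const existing = this.placements.get(objectId);
    if (existing !== undefined) return existing; // already placed: it does not move
    this.placements.set(objectId, callerRegion);
    return callerRegion;
  }
}

const registry = new PlacementRegistry();
// Create flow: the Portland Worker creates TENANT_1, and TENANT_1 creates WIKI_1.
const tenantRegion = registry.access("TENANT_1", "portland");
const wikiRegion = registry.access("WIKI_1", tenantRegion);
// Later, a London Worker reads WIKI_1: the request travels to Portland.
const servedFrom = registry.access("WIKI_1", "london");
```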

In cases where you want to influence the location of a Durable Object instance, ignoring the location of the stub creator, you can use Location Hints and explicitly provide the region where you want the Durable Object instance placed.

```typescript
const durableObjectStub = OBJECT_NAMESPACE.get(id, { locationHint: "eeur" });

// Supported locations as of 2024-Oct-22
// wnam  Western North America
// enam  Eastern North America
// sam   South America
// weur  Western Europe
// eeur  Eastern Europe
// apac  Asia-Pacific
// oc    Oceania
// afr   Africa
// me    Middle East
```

👉🏼 Tip: https://where.durableobjects.live is an amazing little website showing all the Cloudflare locations with Durable Object support.
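If you would rather pin a tenant to the user’s region regardless of where the first stub is created, one option is to derive a hint from request metadata. This sketch is hypothetical: `CfGeo` mirrors only the `continent` and `longitude` fields Cloudflare attaches to `request.cf`, and the longitude cut-offs (-100° for North America, 20° for Europe) plus the choice to map all of Asia to `apac` are arbitrary illustrations, not recommendations.

```typescript
type LocationHint = "wnam" | "enam" | "sam" | "weur" | "eeur" | "apac" | "oc" | "afr" | "me";

// Hypothetical subset of the geo fields Cloudflare attaches to request.cf.
interface CfGeo {
  continent?: string; // e.g. "NA", "EU", "AS"
  longitude?: string; // decimal degrees, as a string
}

function hintFor(geo: CfGeo): LocationHint | undefined {
  const lon = Number(geo.longitude); // NaN when the field is missing
  switch (geo.continent) {
    case "NA":
      // Arbitrary split between western and eastern North America.
      return Number.isFinite(lon) && lon < -100 ? "wnam" : "enam";
    case "SA":
      return "sam";
    case "EU":
      // Arbitrary split between western and eastern Europe.
      return Number.isFinite(lon) && lon > 20 ? "eeur" : "weur";
    case "AS":
      return "apac"; // the Middle East ("me") is not distinguished in this sketch
    case "OC":
      return "oc";
    case "AF":
      return "afr";
    default:
      return undefined; // no hint: let placement follow the stub creator
  }
}
```

In a Worker, the result would feed the second argument of `get`, e.g. `env.TENANT.get(env.TENANT.idFromName(userId), { locationHint: hintFor(request.cf ?? {}) })`. Since an instance does not move after creation, the hint only matters the first time the object is created.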

OK. So what.

You might be wondering: apart from the fact that we used Cloudflare’s proprietary technology, why this, instead of a VPS, AWS Lambda, or anything else really?

This is why I love Durable Objects and what made the Workers platform really click for me.

You are not tied down to a single location. It’s TRIVIAL to put data in any of the Cloudflare regions supporting Durable Objects. Create a Stub to the Durable Object you want, in the location you want, and get to work.

- Imagine having to write a CloudFormation stack to deploy across 10+ regions, and communicating across those regions from your application. All the configuration needed, managing all those regional endpoints. My god! 🤬

- For anyone saying “skill issue” right now: I worked at AWS. I have been using AWS for almost a decade. I have implemented CI/CD pipelines to deploy across all its regions (with AWS SAM, AWS CDK, Terraform).

- The difference is night and day. It doesn’t even come close!

Scale to hundreds, thousands, hundreds of millions of Durable Object instances.

- A Durable Object is a mini server with local, durable, actual disk storage (10GB).

- With just a name or ID, you summon a tiny server instantly at the location you want.

- No other platform allows you to horizontally scale so trivially. Not at this scale.

- Fly.io is, in my opinion, the only other platform with a nice UX for a similar kind of horizontal scaling, via their Machines API offering. However, even in that case, managing the exact location of each instance, creating and tearing them down instantly, referencing them by a constant name/ID throughout the whole lifetime of your application (e.g. across blue/green deployments), and doing all of that transparently within my code without a lot of boilerplate is not as seamless as just creating a Durable Object Stub.

The Cloudflare Developer Platform.

- I only used Workers and Durable Objects for the whole Tiddlyflare product.

- There is so much you can do within the platform, and everything integrates with Workers through Bindings so nicely (and will get even more seamless).

- Workers KV, R2, Workers AI, Cache API, Rate Limiters, upcoming Containers, and so much more.

- Example: Adding a global cache to Tiddlyflare is simply `await env.KV.put(wikiId, src)`. This allows fast reads from any Worker processing requests for that wikiId, without even having to reach the DO instance. 1 line!

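- Example: Deleting a wiki from any Worker only needs the wiki URL, since it encodes the wiki’s DO ID:

  ```typescript
  await env.WIKI.get(env.WIKI.idFromString(extractDOID(wikiUrl))).deleteWiki(wikiId);
  ```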

That’s all it takes. 🚀
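The one-line KV put only covers the write half of a cache. Below is a minimal read-through sketch, assuming a hypothetical `KvLike` interface that covers just the `get(key)` / `put(key, value)` subset of a KV binding, plus hypothetical `loadFromWiki` / `saveToWiki` callbacks standing in for calls to the wiki’s Durable Object. Keep in mind that KV is eventually consistent, so other locations may briefly serve an older copy after a save, and KV has a per-value size limit worth checking against your largest wikis.

```typescript
// Hypothetical subset of a KV binding; real bindings offer more options.
interface KvLike {
  get(key: string): Promise<string | null>;
  put(key: string, value: string): Promise<void>;
}

// Read-through: serve from KV if present, otherwise ask the wiki object
// and populate KV for subsequent readers.
async function readWiki(
  kv: KvLike,
  wikiId: string,
  loadFromWiki: (wikiId: string) => Promise<string>,
): Promise<string> {
  const cached = await kv.get(wikiId);
  if (cached !== null) return cached;
  const src = await loadFromWiki(wikiId);
  await kv.put(wikiId, src);
  return src;
}

// Write path: persist in the Durable Object first (the source of truth),
// then refresh the cached copy.
async function saveWiki(
  kv: KvLike,
  wikiId: string,
  src: string,
  saveToWiki: (wikiId: string, src: string) => Promise<void>,
): Promise<void> {
  await saveToWiki(wikiId, src);
  await kv.put(wikiId, src);
}
```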

Mindset shift

Having said all that, I want to point out an adoption issue with a platform like Workers and Durable Objects. We are transparent, grown-up adults, after all.

Durable Objects have been public for several years now, but most developers have no clue what they are, and what they can do.

That’s because it’s a radically different combination of compute and storage infrastructure product compared to existing platforms.

I mentioned before that someone familiar with any Actor programming model (Erlang, Elixir, Akka) will feel right at home with Durable Objects.

It’s the Actor model with infinite scale built natively into the global Cloudflare network itself.

In the end, this boils down to thinking about the main entities of your application as separate “things” that communicate with each other.

Each entity has its own memory, its own local disk storage, and its own lifecycle. In our example above, we have two main entities: the tenant (user information) and the wikis (versions of HTML content).

At the application level we have 1 TENANT instance per user, and an unlimited number of WIKI instances per user.

You could model all the wikis to be managed by a single Durable Object instance, or go even further, merge the two, and have only one Durable Object instance per user holding all their information, including wiki content. 👎🏼

The problem with that design is that all requests and all operations for a user, including all the operations for all of their wikis, would be handled by a single Durable Object instance. What if a single wiki gets DDoSed and then blocks all others? What if the machine hosting that single Durable Object instance goes down? What if…?

Durable Objects are a very, very powerful programming model. However, a single Durable Object instance is a tiny server with 128MB of memory and 10GB of disk space. There is only so much it can do for you, and only so many requests it can handle (up to about a thousand per second).
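A back-of-the-envelope comparison makes the granularity argument concrete. The per-wiki request rates below are made-up numbers, and the 1,000 requests per second budget is the rough per-object figure quoted above, not a hard guarantee.

```typescript
// Hypothetical per-wiki request rates (requests/second) for a single user.
const wikiRps: Record<string, number> = { notes: 400, journal: 300, viralWiki: 500 };
const PER_OBJECT_RPS = 1000; // rough per-object budget quoted above

// Design A: one Durable Object per user handles every wiki's traffic.
const perUserLoad = Object.values(wikiRps).reduce((sum, rps) => sum + rps, 0);

// Design B: one Durable Object per wiki; the busiest object sees only its own wiki.
const perWikiPeak = Math.max(...Object.values(wikiRps));

console.log(`per-user object: ${perUserLoad} rps (over budget: ${perUserLoad > PER_OBJECT_RPS})`);
// prints: per-user object: 1200 rps (over budget: true)
console.log(`busiest per-wiki object: ${perWikiPeak} rps (over budget: ${perWikiPeak > PER_OBJECT_RPS})`);
// prints: busiest per-wiki object: 500 rps (over budget: false)
```

With one object per user, a single popular wiki drags its siblings down with it; with one object per wiki, the spike stays contained in the object that caused it.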

I previously described a bunch of other example cases and how Durable Objects can influence their architecture.

In summary, the main hurdle of adopting the Workers platform is that you need to start designing your applications with this isolation in mind.

What if every core entity in your application had its own mini server?

That’s Durable Objects.

Conclusion

You made it. Awesome. Thank you. 🙏🏻

I hope you now know what Durable Objects are, and understand why they are powerful.

If you want to use them in your applications, and have more questions, please, please reach out. If you have feedback to improve the platform, please reach out.